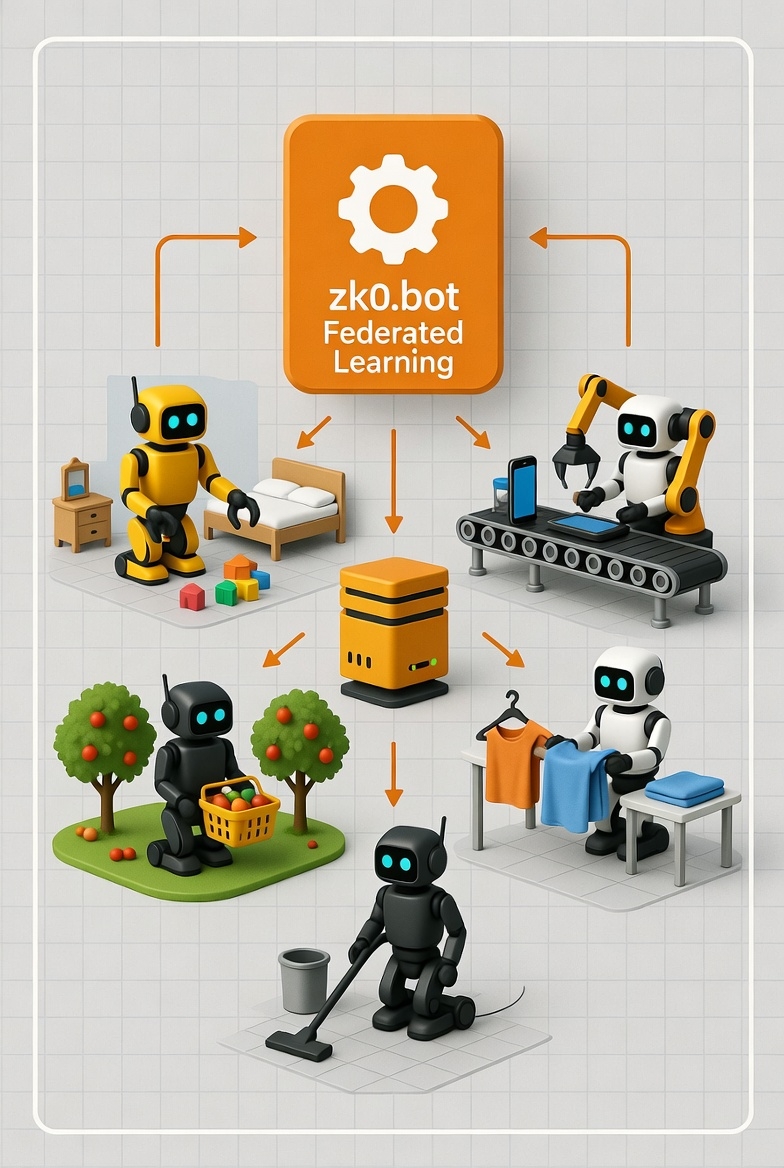

Welcome to zk0

Decentralized AI for the next generation of helpful robots

Imagine teaching robots to help around the house, such as picking up toys or sorting laundry, without any family having to share its home videos. zk0 makes this possible by letting robots learn skills together safely and privately, much like neighbors swapping tips without showing each other their own photos. It's open-source technology that brings smarter, more helpful robots to everyone while keeping your raw data on your own devices.

Discover zk0

zk0 is an open-source federated learning platform for training vision-language-action (VLA) models on real-world robotics datasets. Learn more about the project fundamentals from the white paper. zk0 currently focuses on SmolVLA with SO-100 manipulation tasks, and aims to adopt the latest open-source ML models and more advanced humanoid embodiments as they become available, enabling broader decentralized robotics AI development.

🌸 Federated Learning with Flower

- Secure, scalable training without sharing raw data

- Privacy-preserving model updates across distributed clients

- Handles heterogeneous data and devices
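To make "model updates instead of raw data" concrete, here is a minimal sketch of FedAvg-style aggregation, the weighted averaging that Flower's built-in `FedAvg` strategy performs: each client sends only its locally trained weights plus its number of training examples, and the server averages the weights in proportion to those counts. This is an illustration under stated assumptions, not zk0's implementation. zk0's actual aggregation strategy and scheduling may differ, and the function name `federated_average` and the flat-list weight layout are hypothetical simplifications of real model tensors.

```python
from typing import List, Sequence, Tuple

# Hypothetical update format: (flattened model weights, number of local training examples).
ClientUpdate = Tuple[List[float], int]


def federated_average(updates: Sequence[ClientUpdate]) -> List[float]:
    """Return the example-weighted average of client weights (FedAvg-style).

    Only weights and example counts reach the server; the raw robot
    episodes used for local training never leave the client.
    """
    if not updates:
        raise ValueError("need at least one client update")
    total_examples = sum(n for _, n in updates)
    if total_examples <= 0:
        raise ValueError("total example count must be positive")
    dim = len(updates[0][0])
    if any(len(w) != dim for w, _ in updates):
        raise ValueError("all clients must send weights of the same shape")

    averaged = [0.0] * dim
    for weights, n in updates:
        share = n / total_examples  # clients with more local data contribute more
        for i, w in enumerate(weights):
            averaged[i] += share * w
    return averaged


if __name__ == "__main__":
    # Two hypothetical clients (e.g. two homes) with different amounts of local data.
    home_a = ([1.0, 2.0], 100)
    home_b = ([3.0, 4.0], 300)
    print(federated_average([home_a, home_b]))  # [2.5, 3.5]
```

Weighting by example count keeps a client with only a few episodes from pulling the global model as hard as one with many, which is one simple way to cope with the heterogeneous data mentioned above.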

🤖 SmolVLA Integration

- Efficient 450M-parameter models for robotics AI

- Vision-language-action capabilities for manipulation tasks

- Optimized for consumer GPUs and real-world deployment

📦 SO-100 Datasets

- Real-world manipulation tasks (pick-place, stacking, tool use)

- Diverse robotics scenarios for robust skill learning

- Community-driven dataset expansion

🚀 Production-Ready

- Docker-based deployment for easy scaling

- zk0bot CLI for node operators and monitoring

- Advanced scheduling for optimal convergence

Get Started Today

Join the decentralized robotics revolution

Quick Start

```bash
# Clone and install
git clone https://github.com/ivelin/zk0.git
cd zk0
conda create -n zk0 python=3.10
conda activate zk0
pip install -e .

# Quick check with tiny simulation
./train-fl-simulation.sh --tiny

# Or test a single dataset first
./train-lerobot-standalone.sh -d lerobot/pusht -s 10
```